The echoes of history

Sometimes there are weird connections between very different cultural areas, and we see the echoes of history playing out. I'm going to tell you how pulsars, Nobel Prizes, an iconic album cover, Nazi atrocities, and chart-plotting software all came to be connected.

The discovery of pulsars

In 1967, Jocelyn Bell was working on her Ph.D. as a post-graduate researcher at the Mullard Radio Astronomy Observatory, near Cambridge in the UK. She had helped build a new radio telescope and now she was operating it. On November 28, 1967, she saw a strikingly unusual and regular signal, which the team nicknamed "little green men". The signal turned out to be a pulsar, a type of star new to science.

This was an outstanding discovery that shook up astronomy. The team published a paper in Nature, but that wasn't the end of it. In 1974, the Nobel Prize in Physics went to Antony Hewish, Bell's supervisor, and Martin Ryle, with Hewish cited for his role in the discovery of pulsars. Jocelyn Bell was not included.

Over the years, there's been a lot of controversy over the decision, with many people thinking she was robbed of her share of the prize, either because she was a Ph.D. student or because she was a woman. Bell herself has been very gracious about the whole thing; she is indeed a very classy lady.

The pulsar and early computer graphics

In the late 1960s, a group of Ph.D. students from Cornell University were analyzing data from the pulsar Bell discovered. Among them was Harold Craft, who used early computer systems to visualize the results. Here's what he told Scientific American in 2015: "I found that it was just too confusing. So then, I wrote the program so that I would block out when a hill here was high enough, then the stuff behind it would stay hidden."

Here are three pages from Craft's Ph.D. thesis. Take a close look at the center plot. If Craft had made every line visible, it would have been very difficult to see what was going on. Craft re-imagined the data as if he were viewing it at an angle, like looking down on the ridgelines of a mountain range. With mountain ridgelines, the taller peaks in front hide what's behind them. It was a simple idea, but very effective.
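The occlusion idea can be sketched in a few lines of Python. This is only an illustration of the general technique, not Craft's actual program: the function name `visible_points`, the `offset` parameter, and the sample data are all made up for this example. Traces are processed front to back, and a running "horizon" remembers the highest point drawn so far at each horizontal position; anything at or below the horizon stays hidden.

```python
def visible_points(traces, offset=1.0):
    """Return the plotted heights of each trace, with None where a point is hidden.

    traces: list of equal-length lists of values, front-most trace first.
    offset: vertical shift applied to each successive trace behind the front one.
    (Hypothetical helper for illustration; not taken from Craft's thesis.)
    """
    n_points = len(traces[0])
    # Highest plotted height seen so far at each horizontal position.
    horizon = [float("-inf")] * n_points
    result = []
    for depth, trace in enumerate(traces):
        shown = []
        for i, value in enumerate(trace):
            y = value + depth * offset  # stack traces up the page
            if y > horizon[i]:
                shown.append(y)   # rises above everything in front: visible
                horizon[i] = y
            else:
                shown.append(None)  # hidden behind a taller "hill" in front
        result.append(shown)
    return result


# A tall peak in the front trace hides the middle of the two flat traces behind it.
example = visible_points([[0, 5, 0], [0, 0, 0], [0, 0, 0]], offset=1.0)
print(example)
```

A plotting routine would then draw each trace as line segments, skipping the `None` gaps.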

(Credit: JEN CHRISTIANSEN/HAROLD D. CRAFT)

The center plot is very striking. So striking, in fact, that it found its way into the Cambridge Encyclopaedia of Astronomy (1977 edition):

(Cambridge Encyclopaedia of Astronomy, 1977 edition, via Tim O'Riley)

Joy Division

England in the 1970s was not a happy place, especially in the de-industrialized north. Four young men in Manchester had formed a band and recorded an album. The story goes that one of them, Bernard Sumner, was working in central Manchester and took a break in the city library. He came across the pulsar image in the encyclopedia and liked it a lot.

The band needed an image for their debut album, so they selected this one. They gave it to a recently graduated designer called Peter Saville, with instructions that it should be a black-on-white image. Saville felt the image would look better white-on-black, so he designed this cover.

This is the iconic Unknown Pleasures album from Joy Division.

The starkness of the cover, without the band's name or the album's name, set it apart. The album itself was critically acclaimed, but it never rose high in the charts at the time. However, over time, the iconic status of the band and the album cover grew. In 1980, the lead singer, Ian Curtis, committed suicide. The remaining band members formed a new band, New Order, that went on to massive international fame.

By the 21st century, versions of the album cover were on beach towels, shoes, and tattoos.

Joy plots

In 2017, Claus Wilke created a new charting library for R, ggjoy. His package enabled developers to create plots like the famous Unknown Pleasures album cover. In honor of the album cover, he called these plots joy plots.

Ridgeline plots

This story has a final twist to it. Although joy plots sound great, there's a problem.

Joy Division took their name from a real Nazi atrocity fictionalized in a book called House of Dolls. In some of their concentration camps, the Nazis forced women into prostitution. The camp brothels were called Joy Division.

The name joy plots was meant to be fun and a callback to an iconic data visualization, but there's little joy in evil. Given this history, Wilke renamed his package ggridges and the plots ridgeline plots.

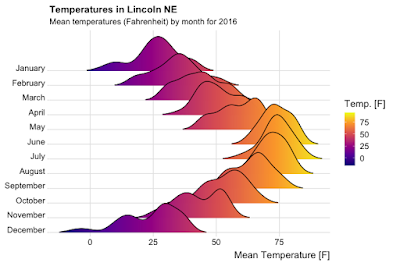

Here's an example of the great visualizations you can produce with it.

If you search around online, you can find people who've re-created the pulsar image using ggridges.

It's not just R programmers who are playing with Unknown Pleasures; Python programmers have got into the act too. Nicolas P. Rougier created a great animation based on the pulsar data set using the venerable Matplotlib plotting package. You can see the animation here.
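If you want to try something similar yourself, here's a minimal static sketch in Matplotlib. It is not Rougier's code, and it doesn't use the real pulsar data: the traces are synthetic, and the helper names `make_traces` and `draw_ridgelines` are invented for this example. Each trace is filled in black below its white line, and traces nearer the front get a higher `zorder`, so they cover whatever is behind them.

```python
import math
import random

import matplotlib
matplotlib.use("Agg")  # render off-screen so no display is needed
import matplotlib.pyplot as plt


def make_traces(n_traces=40, n_points=200, seed=0):
    """Synthetic stand-in for the pulsar data: a noisy bump near the center."""
    rng = random.Random(seed)
    xs = [i / (n_points - 1) for i in range(n_points)]
    traces = []
    for _ in range(n_traces):
        height = rng.uniform(2.0, 6.0)
        center = rng.uniform(0.45, 0.55)
        traces.append([
            height * math.exp(-((x - center) ** 2) / 0.005) + rng.uniform(0.0, 0.3)
            for x in xs
        ])
    return xs, traces


def draw_ridgelines(xs, traces, offset=0.6):
    """Draw white-on-black ridgelines; traces[0] is the front (bottom) trace."""
    fig, ax = plt.subplots(figsize=(5, 7), facecolor="black")
    ax.set_facecolor("black")
    floor = -offset  # every fill extends down to this level
    for depth, trace in enumerate(traces):
        ys = [depth * offset + v for v in trace]
        z = 2 * (len(traces) - depth)  # nearer traces are drawn on top
        # The black fill under the curve hides the lines behind it.
        ax.fill_between(xs, floor, ys, facecolor="black", zorder=z)
        ax.plot(xs, ys, color="white", linewidth=0.8, zorder=z + 1)
    ax.axis("off")
    return fig, ax


xs, traces = make_traces()
fig, ax = draw_ridgelines(xs, traces)
fig.savefig("ridgelines.png", facecolor="black")
```

The fill-and-overdraw trick avoids computing which points are hidden: the renderer does the occlusion for you, at the cost of drawing ink that is later covered.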
