Normal is all around you, and so is not-normal

The normal distribution is the most important statistical distribution. In this blog post, I'm going to talk about its properties, where it occurs, and why it's so very important. I'm also going to talk about how using the normal distribution when you shouldn't can lead to disaster and what you can do about it.

A rose by any other name

The normal distribution has a number of different names in different disciplines:

- Normal distribution. This is the name used by statisticians and data scientists.

- Gaussian distribution. This is what physicists call it.

- The bell curve. This is the name used by social scientists and by people who don't understand statistics.

I'm going to call it the normal distribution in this blog post, and I'd advise you to call it this too. Even if you're not a data scientist, using the most appropriate name helps with communication.

What it is and what it looks like

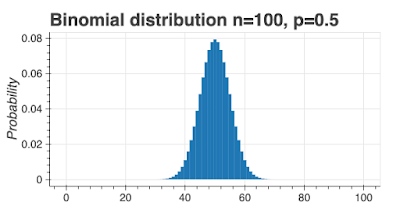

When we're measuring things in the real world, we see different values. For example, if we measure the heights of 10-year-old boys in a town, we'd see some tall boys, some short boys, and most boys around the "average" height. We can work out what fraction of boys are a certain height and plot a chart of frequency on the y axis and height on the x axis. This gives us a probability or frequency distribution. There are many, many different types of probability distribution, but the normal distribution is the most important.

(As an aside, you may remember making histograms at school. These are "sort-of" probability distributions. For example, you might have recorded the height of all the children in a class, grouped them into height ranges, counted the number of children in each height range, and plotted the chart. The y axis would have been a count of how many children were in that height range. To turn this into a probability distribution, the y axis would become the fraction of all children in that height range.)
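As a minimal sketch of that aside, here's how a school-style histogram of counts becomes a set of fractions. The heights and the 5 cm bin width are made-up values for illustration only.

```python
from collections import Counter

# Hypothetical heights (cm) for a class of 12 children; made-up data.
heights_cm = [128, 131, 133, 134, 136, 137, 138, 139, 141, 142, 145, 149]

bin_width = 5  # each bin covers 5 cm, e.g. 130-134 (an arbitrary choice)

# Count how many children fall in each height range (the school histogram).
counts = Counter((h // bin_width) * bin_width for h in heights_cm)

# Divide each count by the total to get the fraction in each range.
total = len(heights_cm)
fractions = {start: n / total for start, n in sorted(counts.items())}

for start, frac in fractions.items():
    print(f"{start}-{start + bin_width - 1} cm: {frac:.3f}")
```

The fractions add up to 1, which is what makes the chart usable as a (rough) probability distribution.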

Here's what a normal probability distribution looks like. Yes, it's the classic bell curve shape which is exactly symmetrical.

The formula describing the curve is quite complex, but all you need to know for now is that it's described by two numbers: the mean (often written \(\mu\)) and the standard deviation (often written \(\sigma\)). The mean tells you where the peak is and the standard deviation gives you a measure of the width of the curve.

To greatly summarize: values near the mean are the most likely to occur and the further you go from the mean, the less likely they are. This lines up with our boys' heights example: there aren't many very short or very tall boys and most boys are around the mean height.

If you change the mean or the standard deviation, you change the curve: changing the mean moves the peak, and changing the standard deviation makes the curve wider or narrower. It turns out that changing the mean and standard deviation just shifts and scales the curve; because of its underlying mathematical properties, the basic shape stays the same. Most distributions don't behave like this; changing parameters can greatly change the entire shape of the distribution (for example, the beta distribution wildly changes shape if you change its parameters). The normal scaling property has some profound consequences, but for now, I'll just focus on one. We can easily map all normal distributions to one standard normal distribution (mean 0, standard deviation 1). Because the properties of the standard normal are known, we can easily do math on the standard normal. To put it another way, it greatly speeds up what we need to do.
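Here's a small sketch of that mapping using Python's standard library `statistics.NormalDist`. The mean of 140 cm and standard deviation of 7 cm are assumed values for the boys' heights example, not real data.

```python
from statistics import NormalDist

# Hypothetical height distribution: mean 140 cm, standard deviation 7 cm.
heights = NormalDist(mu=140, sigma=7)
standard = NormalDist(mu=0, sigma=1)  # the standard normal

x = 150  # a height we're interested in, in cm

# Map x onto the standard normal: how many standard deviations above the mean?
z = (x - heights.mean) / heights.stdev

# The probability of being shorter than x is the same either way.
p_direct = heights.cdf(x)
p_via_standard = standard.cdf(z)

print(f"z = {z:.3f}")
print(f"P(height < {x}) direct: {p_direct:.4f}, via standard normal: {p_via_standard:.4f}")
```

Both routes give the same probability, which is why tables and libraries only ever need to know about the standard normal.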

Why the normal distribution is so important

Here are some normal distribution examples from the real world.

Let's say you're producing precision bolts. You need to supply 1,000 bolts of a precise specification to a customer. Your production process has some variability. How many bolts do you need to manufacture to get 1,000 good ones? If you can describe the variability using a normal distribution (which is the case for many manufacturing processes), you can work out how many you need to produce.
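A rough sketch of the bolt calculation might look like this. The target diameter, process standard deviation, and tolerance limits are all hypothetical numbers chosen for illustration.

```python
import math
from statistics import NormalDist

# Hypothetical process: bolt diameter has mean 10.00 mm and sd 0.02 mm.
process = NormalDist(mu=10.00, sigma=0.02)

# Hypothetical customer specification: 9.96 mm to 10.04 mm.
lower_spec, upper_spec = 9.96, 10.04

# Fraction of bolts that fall inside the specification (about 0.9545 here,
# because the limits sit two standard deviations either side of the mean).
p_good = process.cdf(upper_spec) - process.cdf(lower_spec)

# Number to manufacture so that, on average, 1,000 are good.
bolts_needed = math.ceil(1000 / p_good)

print(f"Fraction in spec: {p_good:.4f}")
print(f"Bolts to manufacture: {bolts_needed}")
```

This gives the number needed on average. To be confident of getting at least 1,000 good bolts in a single run, you'd add a safety margin on top.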

Imagine you're outfitting an army and you're buying boots. You want to buy the minimum number of boots while still fitting everyone. You know that many body dimensions follow the normal distribution (most famously, chest circumference), so you can make a good estimate of how many boots of different sizes to buy.

Finally, let's say you've bought some random stocks. What might the daily change in value be? Under usual conditions, the change in value roughly follows a normal distribution, so you can estimate what your portfolio might be worth tomorrow.

It's not just these three examples; many phenomena in different disciplines are well described by the normal distribution.

The normal distribution is also common because of something called the central limit theorem (CLT). Let's say I'm taking measurement samples from a population, e.g. measuring the speed of cars on a freeway. The CLT says that, for large enough samples, the distribution of the sample means will approximately follow a normal distribution regardless of the underlying distribution (as long as that distribution has a finite variance). In the car speed example, I don't know how the speeds are distributed, but I can calculate a mean and estimate how close it's likely to be to the true (population) mean. This sounds a bit abstract but it has profound consequences in statistics and means that the normal distribution comes up time and time again.
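The CLT is easier to believe when you see it. This is a small simulation sketch: it draws samples from an exponential distribution (which is heavily skewed and nothing like a normal) and looks at how the sample means behave. The sample size, number of samples, and seed are arbitrary choices.

```python
import random
from statistics import fmean, stdev

random.seed(42)  # fixed seed so the run is repeatable

sample_size = 50     # measurements per sample
num_samples = 5_000  # number of samples we take

# Exponential distribution with rate 1: mean 1, standard deviation 1, very skewed.
sample_means = [
    fmean(random.expovariate(1.0) for _ in range(sample_size))
    for _ in range(num_samples)
]

mean_of_means = fmean(sample_means)
spread_of_means = stdev(sample_means)

# The CLT predicts the spread of the sample means: sd / sqrt(sample size).
expected_spread = 1 / sample_size ** 0.5

# For a normal distribution, roughly 68% of values lie within one sd of the mean.
within_one_sd = sum(
    abs(m - mean_of_means) <= spread_of_means for m in sample_means
) / num_samples

print(f"Mean of sample means: {mean_of_means:.3f}")
print(f"Spread of sample means: {spread_of_means:.3f} (CLT predicts {expected_spread:.3f})")
print(f"Fraction within one sd: {within_one_sd:.3f}")
```

Even though the individual measurements are far from normal, the sample means cluster around the true mean in the way the normal distribution predicts.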

Finally, it's important because it's so well-known. The math to describe and use the normal distribution has been known for centuries. It's been written about in hundreds of textbooks in different languages. More importantly, it's very widely taught; almost all numerate degrees will cover it and how to use it.

Let's summarize why it's important:

- It comes up in nature, in finance, in manufacturing etc.

- It comes up because of the CLT.

- The math to use it is standardized and well-known.

What useful things can I do with the normal distribution?

Let's take an example from the insurance world. Imagine an insurance company insures house contents and cars. Now imagine the claims distribution for cars follows a normal distribution and the claims distribution for house contents also follows a normal distribution. Let's say in a typical year the claims distributions look something like this (cars on the left, houses on the right).

(The two charts look identical except for the numbers on the x and y axis. That's expected. I said before that all normal distributions are just scaled versions of the standard normal. Another way of saying this is, all normal distribution plots look the same.)

What does the distribution look like for cars plus houses?

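The long-winded answer is to use convolution (or even Monte Carlo). But because the house and car distributions are normal, and assuming the two sets of claims are independent, we can just add the means and add the variances:

\[\mu_{\text{total}} = \mu_{\text{cars}} + \mu_{\text{houses}}\]

\[\sigma_{\text{total}} = \sqrt{\sigma_{\text{cars}}^2 + \sigma_{\text{houses}}^2}\]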

So we can calculate the combined distribution in a heartbeat. The combined distribution looks like this (another normal distribution, just with a different mean and standard deviation).

To be clear: the combined distribution is itself a normal distribution only because the two distributions we started with were normal.
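The cars-plus-houses shortcut can be sketched with Python's `statistics.NormalDist`, which supports adding two normal distributions directly (it treats them as independent). The claim figures below are hypothetical, in millions of dollars.

```python
from statistics import NormalDist

# Hypothetical annual claims totals, in $ millions.
cars = NormalDist(mu=50, sigma=6)
houses = NormalDist(mu=30, sigma=8)

# Adding two NormalDist objects assumes they are independent:
# the means add, and the variances (sigma squared) add.
combined = cars + houses

# Chance that total claims exceed $100 million in a year.
p_over_100 = 1 - combined.cdf(100)

print(f"Combined mean: {combined.mean}, combined sd: {combined.stdev}")
print(f"P(total claims > 100): {p_over_100:.4f}")
```

With these numbers the combined mean is 80 and the combined standard deviation is 10 (the square root of 36 + 64), so no convolution or simulation is needed.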

It's not just adding distributions together. The normal distribution allows similar shortcuts for other operations, such as subtracting one normal distribution from another or multiplying or dividing by a constant. The normal distribution makes things that would otherwise be hard very fast and very easy.

Some properties of the normal distribution

I'm not going to dig into the math here, but I am going to point out a few things about the distribution you should be aware of.

The "standard normal" distribution goes from \(-\infty\) to \(+\infty\). The further away you get from the mean, the lower the probability, and once you go several standard deviations away, the probability is quite small, but nevertheless, it's still present. Of course, you can't show \(\infty\) on a chart, so most people cut off the x-axis at some convenient point. This might give the misleading impression that there's an upper or lower x-value; there isn't. If your data has upper or lower cut-off values, be very careful modeling it using a normal distribution. In this case, you should investigate other distributions like the truncated normal.
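To get a feel for how quickly the tails shrink without ever reaching zero, here's a short sketch that computes the probability of landing more than k standard deviations from the mean, in either direction, for the standard normal.

```python
from statistics import NormalDist

standard = NormalDist()  # mean 0, standard deviation 1

# Two-sided tail probability: P(|Z| > k) = 2 * P(Z < -k), by symmetry.
tail = {k: 2 * standard.cdf(-k) for k in (1, 2, 3, 4, 5, 6)}

for k, p in tail.items():
    print(f"More than {k} sd from the mean: {p:.3g}")
```

The probabilities fall very fast, but even at six standard deviations the value is small rather than zero.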

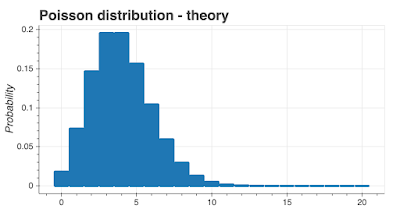

The normal distribution models continuous variables, e.g. variables like speed or height that can have any number of decimal places (but see my previous paragraph on \(\infty\)). However, it's often used to model discrete variables (e.g. number of sheep, number of runs scored, etc.). In practice, this is mostly OK, but again, I suggest caution.

Abuses of the normal distribution and what you can do

Because it's so widely known and so simple to use, people have used it where they really shouldn't. There's a temptation to assume the normal when you really don't know what the underlying distribution is. That can lead to disaster.

In the financial markets, people have used the normal distribution to predict day-to-day variability. The normal distribution predicts that large changes will occur with very low probability; these are often called "black swan events". However, if the distribution isn't normal, "black swan events" can occur far more frequently than the normal distribution would predict. The reality is, financial market distributions are often not normal. This creates opportunities and risks. The assumption of normality has led to bankruptcies.

Assuming normality can lead to models making weird or impossible predictions. Let's say I assume the number of units sold for a product is normally distributed. Using previous years' sales, I forecast unit sales next year to be 1,000 units with a standard deviation of 500 units. I then create a Monte Carlo model to forecast next year's profits. Can you see what can go wrong here? Monte Carlo modeling uses random numbers. In the sales forecast example, there's a 2.28% chance that any single draw will be a negative sales number, which is clearly impossible. Given that Monte Carlo models often use tens of thousands of simulations, it's extremely likely the final calculation will have been affected by impossible numbers. This kind of mistake is insidious and hard to spot, and even experienced analysts make it.
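The sales forecast problem is easy to reproduce. This sketch uses the forecast from the example (mean 1,000 units, standard deviation 500 units); the number of simulations and the random seed are arbitrary choices.

```python
import random
from statistics import NormalDist

# The sales forecast from the example above.
sales = NormalDist(mu=1000, sigma=500)

# Probability that a single random draw is negative (zero is 2 sd below the mean).
p_negative = sales.cdf(0)

# A Monte Carlo run that naively draws unit sales from the normal distribution.
random.seed(1)  # fixed seed so the run is repeatable
num_simulations = 10_000
draws = [random.gauss(sales.mean, sales.stdev) for _ in range(num_simulations)]
num_negative = sum(d < 0 for d in draws)

# Probability that at least one of the draws is negative.
p_at_least_one = 1 - (1 - p_negative) ** num_simulations

print(f"P(negative sales) per draw: {p_negative:.4f}")
print(f"Negative draws in {num_simulations} simulations: {num_negative}")
print(f"P(at least one negative draw): {p_at_least_one:.6f}")
```

Roughly 2% of the simulated years have negative sales, so a couple of hundred impossible scenarios quietly feed into the profit forecast.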

If you're a manager, you need to understand how your team has modeled data.

- Ask what distributions they've used to model their data.

- Ask them why they've used that distribution and what evidence they have that the data really is distributed that way.

- Ask them how they're going to check their assumptions.

- Most importantly, ask them if they have any detection mechanism in place to check for deviation from their expected distribution.
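As one crude illustration of what a check on the normality assumption could look like, this sketch computes the skewness and excess kurtosis of a data set; both should be close to zero for normally distributed data. The function name and the test data are my own for illustration, and a real team would probably use a formal statistical test as well.

```python
import random
from statistics import fmean


def skew_and_excess_kurtosis(data):
    """Return (skewness, excess kurtosis); both are near 0 for normal data."""
    n = len(data)
    mean = fmean(data)
    m2 = sum((x - mean) ** 2 for x in data) / n  # variance
    m3 = sum((x - mean) ** 3 for x in data) / n  # measures asymmetry
    m4 = sum((x - mean) ** 4 for x in data) / n  # measures tail weight
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


random.seed(7)  # fixed seed so the run is repeatable
normal_data = [random.gauss(0, 1) for _ in range(20_000)]
skewed_data = [random.expovariate(1.0) for _ in range(20_000)]

normal_skew, normal_kurt = skew_and_excess_kurtosis(normal_data)
skewed_skew, skewed_kurt = skew_and_excess_kurtosis(skewed_data)

print(f"Normal data: skew {normal_skew:.2f}, excess kurtosis {normal_kurt:.2f}")
print(f"Skewed data: skew {skewed_skew:.2f}, excess kurtosis {skewed_kurt:.2f}")
```

Values far from zero, as with the skewed data here, are a warning sign that the normal distribution is the wrong model.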

History - where the normal came from

Rather unsatisfactorily, there's no clear "Eureka!" moment for the discovery of the distribution; it seems to have been the accumulation of the work of a number of mathematicians. Abraham de Moivre kicked off the process in 1733 but didn't formalize the distribution, leaving Gauss to explicitly describe it in 1801 [https://medium.com/@will.a.sundstrom/the-origins-of-the-normal-distribution-f64e1575de29].

Gauss used the normal distribution to model measurement errors and so predict the path of the asteroid Ceres [https://en.wikipedia.org/wiki/Normal_distribution#History]. This sounds a bit esoteric, but there's a point here that's still relevant. Any measurement-taking process involves some form of error. Assuming no systematic bias, these errors are well-modeled by the normal distribution. So any unbiased measurement taking today (e.g. opinion polling, measurements of particle mass, measurement of precision bolts, etc.) uses the normal distribution to calculate uncertainty.

In 1810, Laplace placed the normal distribution at the center of statistics by formulating the Central Limit Theorem.

The math

The probability density function is given by:

\[f(x) = \frac{1}{\sigma \sqrt{2 \pi}} e ^ {-\frac{1}{2} ( \frac{x - \mu}{\sigma}) ^ 2 }\]

\(\sigma\) is the standard deviation and \(\mu\) is the mean. In the normal distribution, the mean, the mode, and the median are all the same.

This formula is almost impossible to work with directly, but you don't need to. There are extensive libraries that will do all the calculations for you.
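To show that the libraries really are doing this calculation, here's a sketch that implements the formula by hand and compares it with Python's standard library `statistics.NormalDist`. The function name `normal_pdf` and the example values (mean 140, standard deviation 7) are my own for illustration.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu, sigma):
    """The normal density formula, written out term by term."""
    exponent = -0.5 * ((x - mu) / sigma) ** 2
    return math.exp(exponent) / (sigma * math.sqrt(2 * math.pi))


# Hypothetical distribution: mean 140, standard deviation 7.
dist = NormalDist(mu=140, sigma=7)

by_hand = normal_pdf(150, mu=140, sigma=7)
by_library = dist.pdf(150)

print(f"By hand: {by_hand:.6f}, library: {by_library:.6f}")
```

The two values agree, so in practice you can leave the formula to the library and concentrate on the modeling.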

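Adding independent normally distributed variables is easy. The sum is also normally distributed, with:

\[\mu_{\text{total}} = \mu_1 + \mu_2\]

\[\sigma_{\text{total}} = \sqrt{\sigma_1^2 + \sigma_2^2}\]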