Simpson's paradox can mess up your business

Let's imagine you're the Chief Revenue Officer at a manufacturing company that sells tubes and cylinders. You're having trouble with European sales reps discounting, so you offer a spif: the country team that sells at the highest price gets a week-long vacation somewhere warm and sunny with free food and drink. The Italian and German sales teams are raring to go.

At the end of the quarter, you have these results [Wang]:

| Product type | ||||

| Cylinder | Tube | |||

| Sales team | No sales | Average price | No sales | Average price |

| German | 80 | €100 | 20 | €70 |

| Italian | 20 | €120 | 80 | €80 |

This looks like a clear victory for the Italians! They maintained a higher price for both cylinders and tubes! If they have a higher price for every item, then obviously, they've won. The Italians start packing their swimsuits.

Not so fast, say the Germans, let's look at the overall results.

| Sales team | Average price |

| German | €94 |

| Italian | €88 |

Despite having a lower selling price for both cylinders and tubes, the Germans have maintained a higher selling price overall!

How did this happen? It's an instance of Simpon's paradox.

Why the results reversed

Here's how this happened: the Germans sold more of the expensive cylinders and the Italians sold more of the cheaper tubes. The average price is the ratio of the total monetary amount/total sales quantity. To put it very simply, ratios (prices) can behave oddly.

Let's look at a plot of the selling prices for the Germans and Italians.

The blue circles are tubes and the orange circles are cylinders. The size of the circles represents the number of sales. The little red dot in the center of the circles is the price.

Let's look at cylinders. Plainly, the Italians sold them at a higher price, but they're the most expensive item and the Germans sold more of them. Now, let's look at tubes, once again, the Italians sold them at a higher price than the Germans, but they're cheaper than cylinders and the Italians sold more of them.

You can probably see where this is going. Because the Italians sold more of the cheaper items, their average (or pooled) price is dragged down, despite maintaining a higher price on a per-item basis. I've re-drawn the chart, but this time I've added a horizontal black line that represents the average.

The product type (cylinders or tubes) is known in statistics as a confounder because it confounds the results. It's also known as a conditioning variable.

A disturbing example - does this drug work?

The sales example is simple and you can see the cause of the trouble immediately. Let's look at some data from a (pretend) clinical trial.

Imagine there's some disease that impacts men and women and that some people get better on their own without any treatment at all. Now let's imagine we have a drug that might improve patient outcomes. Here's the data [Lindley].

| Female | Male | |||||

| Recovered | Not Recovered | Rate | Recovered | Not Recovered | Rate | |

| Took drug | 8 | 2 | 80% | 12 | 18 | 40% |

| Not take drug | 21 | 9 | 70% | 3 | 7 | 30% |

Wow! The drug gives everyone an added 10% on their recovery rate. Surely we need to prescribe this for everyone? Let's have a look at the overall data.

| Everyone | |||

| Recovered | Not Recovered | Rate | |

| Took drug | 20 | 20 | 50% |

| Not take drug | 24 | 16 | 60% |

What this data is saying is, the drug reduces the recovery rate by 10%.

Let me say this again.

- For men, the drug improves recovery by 10%.

- For women, the drug improves recovery by 10%.

- For everyone, the drug reduces recovery by 10%.

If I'm a clinician, and I know you have the disease, if you're a woman, I would recommend you take the drug, if you're a man I would recommend you take the drug, but if I don't know your gender, I would advise you not to take the drug. What!!!!!

This is exactly the same math as the sales example I gave you above. The explanation is the same. The only thing different is the words I'm using and the context.

Simpson and COVID

In the United States, it's pretty well-established that black and Hispanic people have suffered disproportionately from COVID. Not only is their risk of getting COVID higher, but their health outcomes are worse too. This has been extensively covered in the press and on the TV news.

In the middle of 2020, the CDC published data that showed fatality rates by race/ethnicity. The fatality rate means the fraction of patients with COVID who die. The data showed a clear result: white people had the worst fatality rate of the racial groups they studied.

Doesn't this contradict the press stories?

No.

There are three factors at work:

- The fatality rate increases with age for all ethnic groups. It's much higher for older people (75+) than younger people.

- The white population is older than the black and Hispanic populations.

- Whites have lower fatality rates in almost all age groups.

This is exactly the same as the German and Italian sales team example I started with. As a fraction of their population, there are more old white people than old black and Hispanic people, so the fatality rates for the white population are dominated by the older age group in a way that doesn't happen for blacks and Hispanics.

In this case, the overall numbers are highly misleading and the more meaningful comparison is at the age-group level. Mathematically, we can remove the effect of different demographics to make an apples-to-apples comparison of fatality rates, and that's what the CDC has done.

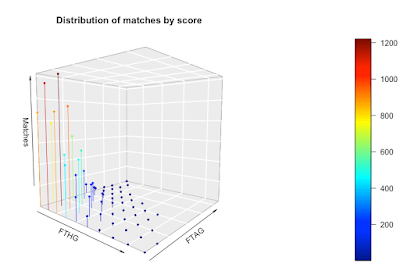

In pictures

Wikipedia has a nice article on Simpson's paradox and I particularly like the animation that's used to accompany it, so I'm copying it here.

Each of the dots represents a measurement, for example, it could be price. The colors represent categories, for example, German or Italian sales teams, etc. if we look at the results overall, the trend is negative (shown by the black dots and black line). If we look at the individual categories, the trend is positive (colors). In other words, the aggregation reverses the individual trends.

The classic example - sex discrimination at Berkeley

The Simpson's paradox example that's nearly always quoted is the Berkeley sex discrimination case [Bickel]. I'm not going to quote it here for two reasons: it's thoroughly discussed elsewhere, and the presentation of the results can be confusing. I've stuck to simpler examples to make my point.

American politics

A version of Simpson's paradox can occur in American presidential elections, and it very nicely illustrates the cause of the problem.

In 2016, Hilary Clinton won the popular vote by 48.2% to 46.1%, but Donald Trump won the electoral college by 304 to 227. The reason for the reversal is simple, it's the population spread among the states and the relative electoral college votes allocated to the states. As in the case of the rollup with the sales and medical data I showed you earlier, exactly how the data rolls up can reverse the result.

The question, "who won the 2016 presidential election" sounds simple, but it can have several meanings:

- who was elected president

- who got the most votes

- who got the most electoral college votes

The most obvious meaning, in this case, is, "who was elected president". But when you're analyzing data, it's not always obvious what the right question really is.

The root cause of the problem

The problem occurs because we're using an imprecise language (English) to interpret mathematical results. In the sales and medical data cases, we need to define what we want.

In the sales price example, do we mean the overall price or the price for each category? The contest was ambiguous, but to be fair to our CRO, this wasn't obvious initially. Probably, the fairest result is to take the overall price.

For the medical data case, we're probably better off taking the male and female data separately. A similar argument applies for the COVID example. The clarifying question is, what are you using the statistics for? In the drug data case, we're trying to understand the efficacy of a drug, and plainly, gender is a factor, so we should use the gendered data. In the COVID data case, if we're trying to understand the comparative impact of COVID on different races/ethnicities, we need to remove demographic differences.

If this was the 1980s, we'd be stuck. We can't use statistics alone to tell us what the answer is, we'd have to use data from outside the analysis to help us [Pearl]. But this isn't the 1980s anymore, and there are techniques to show the presence of Simpson's paradox. The answer lies in using something called a directed acyclic graph, usually called a DAG. But DAGs are a complex area and too complex for this blog post that I'm aiming at business people.

What this means in practice

There's a very old sales joke that says, "we'll lose money on every sale but make it up in volume". It's something sales managers like to quote to their salespeople when they come asking for permission to discount beyond the rules. I laughed along too, but now I'm not so quick to laugh. Simpson's paradox has taught me to think before I speak. Things can get weird.

Interpreting large amounts of data is hard. You need training and practice to get it right and there's a reason why seasoned data scientists are sought after. But even experienced analysts can struggle with issues like Simpson's paradox and multi-comparison problems.

The red alert danger for businesses occurs when people who don't have the training and expertise start to interpret complex data. Let's imagine someone who didn't know about Simpson's paradox had the sales or medical data problem I've described here. Do you think they could reach the 'right' conclusion?

The bottom line is simple: you've got to know what you're doing when it comes to analysis.