There are lots of chart types, but if you want to lie or mislead people, the best chart to use is the pie chart. I’m going to show you how to distort reality with pie charts, not so you can be a liar, but so you know never to use pie charts and to choose more honest visualizations.

Let's start with the one positive thing I know about pie charts: they're called camembert charts in France and cake charts in Germany. On balance, I prefer the French term, but we're probably stuck with the English term. Unlike camembert, pie charts often leave a bad taste in my mouth and I'll show you why.

Take a look at the pie chart below. Can you put the six slices in order from largest to smallest? What percentages do you think the slices represent?

Here’s how I’ve misled you:

- Offset the slices from the 12 o’clock position to make size comparison harder. I've robbed you of the convenient 'clock face' frame of reference.

- Not put the slices in order (largest to smallest). Humans are bad at judging the relative sizes of areas and by playing with the order, I'm making it even harder.

- Not labeled the slices. This ought to be standard practice, but shockingly often isn't.

| Slice | Share (%) |
| --- | --- |
| Gray | 20.9 |
| Green | 17.5 |
| Light blue | 16.8 |
| Dark blue | 16.1 |
| Yellow | 15.4 |
| Orange | 13.3 |

How close were you? How good was my attempt to deceive you?
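To see why the ordering is so hard to judge by eye, it helps to convert each percentage into the angle its slice spans. This is a minimal sketch using only the percentages listed above; the names `shares` and `wedge_angles` are mine, purely for illustration.

```python
# Slice percentages as listed above.
shares = {
    "Gray": 20.9,
    "Green": 17.5,
    "Light blue": 16.8,
    "Dark blue": 16.1,
    "Yellow": 15.4,
    "Orange": 13.3,
}


def wedge_angles(data):
    """Convert each share into the angle (in degrees) its pie slice spans."""
    total = sum(data.values())
    return {name: 360 * value / total for name, value in data.items()}


angles = wedge_angles(shares)

# Difference in angle between each slice and the next-smallest one.
ordered = sorted(angles.values(), reverse=True)
gaps = [bigger - smaller for bigger, smaller in zip(ordered, ordered[1:])]

for name, angle in angles.items():
    print(f"{name:<10} {angle:5.1f} degrees")
print(f"Smallest gap between neighbouring sizes: {min(gaps):.1f} degrees")
```

The four middle slices each differ from the next one by only about 2.5 degrees, which is a very small difference to pick out by eye once the slices are rotated and shuffled.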

Let’s use a bar chart to represent the same data.
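As a rough illustration of why bars are easier to read, here is a minimal text-only sketch that draws the same six values as sorted bars. It sticks to the Python standard library; the function name `text_bar_chart` and the bar width of 40 characters are my own arbitrary choices, and a plotting library would normally do this job.

```python
# Slice percentages from the pie chart example, in no particular order.
shares = {
    "Yellow": 15.4,
    "Gray": 20.9,
    "Orange": 13.3,
    "Light blue": 16.8,
    "Green": 17.5,
    "Dark blue": 16.1,
}


def text_bar_chart(data, width=40):
    """Return one text line per item, largest first, with bars scaled to `width`."""
    largest = max(data.values())
    lines = []
    for name, value in sorted(data.items(), key=lambda item: item[1], reverse=True):
        bar = "#" * round(value / largest * width)
        lines.append(f"{name:<10} {bar} {value:.1f}%")
    return lines


chart = text_bar_chart(shares)
print("\n".join(chart))
```

Even in plain text, the bars share a common baseline, are sorted, and carry their labels, so the order and the rough sizes can be read off at a glance.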

I've read guidance that suggests you should only use a pie chart if you're showing two quantities that are obviously unequal. This gives the so-called Pac-Man pie chart. Even here, I think there are better representations, and our old friend the bar chart would work better (albeit less interestingly).

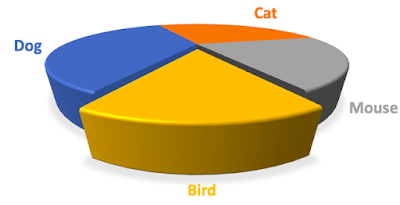

Now let’s look at the king of deceptive practices, the 3D pie chart. This one is great because you can thoroughly mislead while still claiming to be honest. I’m going to work through a short deceptive example.

Let’s imagine there are four political parties standing in an election. The percentage results are below.

| Party | Vote share (%) |
| --- | --- |
| Dog | 36 |
| Cat | 28 |
| Mouse | 21 |
| Bird | 15 |

You work for Bird, which unfortunately got the lowest share of the vote. Your job is to deceive the electorate into thinking Bird did much better than they did.

You can obscure the result by showing it as a pie chart without number labels. You can even mute the opposition colors to fool the eye. But you can go one better. You can create a 3D pie chart with shifted perspective and 'point explosion', using the data I gave above, like so.

Here's what I did to create the chart:

- Took the data above as my starting point and created a pie chart.

- Rotated the chart so my slice was at the bottom.

- Made the pie chart 3D.

- Changed the perspective to emphasize my party.

- Used 'point explosion' to pull my slice out of the main body of the chart to emphasize it even more.

- Used shading.

This now makes it look like Bird was a serious contender in the election. The fraction of the chart area taken up by the Bird party’s color is completely disproportionate to their voter share. But you can claim honesty because the slice would still be the correct proportion if the chart were viewed from above. If challenged, you can turn it into a technical/academic debate about data visualization that will turn off most people and make your opponents sound like they’re nit-picking.
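To put a rough number on that distortion, here is a back-of-the-envelope sketch of how much of the visible chart a front slice covers once the pie is tilted and given thickness. Everything in the model is my own assumption rather than how any charting tool renders: a unit-radius pie, an orthographic view, the slice centered on the front edge, and hypothetical values of a 30-degree viewing elevation and a thickness of 0.4 of the radius. Perspective, explosion, and shading are left out, so this only accounts for part of the effect.

```python
import math


def apparent_share(share, elevation_deg=30.0, thickness=0.4):
    """Estimate the fraction of a tilted 3D pie's visible area that one slice covers.

    Assumptions (for illustration only): unit-radius pie, orthographic view,
    slice centered on the front edge, no explosion, perspective, or shading.
    """
    if not 0 < share <= 0.5:
        raise ValueError("share must be in (0, 0.5] so the slice fits on the front half")
    theta = math.radians(elevation_deg)  # 90 degrees = looking straight down
    half_width = math.pi * share         # half the slice's angle, in radians

    # Top face: tilting squashes the whole disc equally, so shares are preserved.
    top_total = math.pi * math.sin(theta)
    top_slice = share * top_total

    # Side wall: only the front half of the rim is visible, and the front
    # slice owns the part of that wall lying directly below its arc.
    wall_height = thickness * math.cos(theta)
    wall_total = 2 * wall_height
    wall_slice = 2 * math.sin(half_width) * wall_height

    return (top_slice + wall_slice) / (top_total + wall_total)


bird = apparent_share(0.15)
print(f"Bird: 15% of the vote, about {bird:.0%} of the visible chart")
```

Under these assumptions, Bird's 15% covers roughly 24% of the visible chart, purely because the front slice gets the side wall as a bonus. Setting `elevation_deg=90` (the view from above) returns the honest 15%, which is exactly the defense described above.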

You don’t have to go this far to mislead with a pie chart. All you have to do is increase the cognitive burden to interpret a chart. Some, maybe even all, of your audience might not spot what you’re trying to hide because they’re in a hurry. You can mislead some of your audience all of the time.

I want to be clear: I'm telling you about these deceptive practices so you can avoid them. There are good reasons why honest analysts don’t use pie charts. In fact, I would go one stage further: if you see a pie chart, be on your guard against dishonesty. As one of my colleagues used to say, ‘friends don’t let friends use pie charts’.