Why should you care about probability distributions?

Using the wrong probability distribution can be extremely expensive for businesses:

- for businesses using machinery (factories, vehicles, aircraft, etc.), it can lead to parts being changed too frequently or too infrequently

- for businesses relying on returning customers, it can lead to substantial under- or overestimates of revenue and/or targeting the wrong customers with promotions

- for businesses forecasting future sales by territory and/or product, it can lead to poor territory allocation or poor product resource allocation.

Given that it's so important, what is a probability distribution, and what are some examples?

What's a probability distribution?

At its simplest, a probability distribution tells you how likely an outcome is given some input. For example, how is sales probability distributed by price, or how likely is a component to fail in the next month?

If something is certain to occur, its probability is 1; if it's certain not to occur, its probability is 0. Let's imagine a component lasts a maximum of 6 months before failure. Our probability distribution might show the probability of failure on each of days 1 to 180. Because the component must fail on one of those days, the failure probabilities for all days must sum to 1.
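As a minimal sketch of the component example, the snippet below builds a made-up failure distribution over days 1 to 180 and checks that it sums to 1. The linearly increasing weights are purely an assumption for illustration, not real failure data.

```python
# Hypothetical failure weights: failure gets more likely as the component ages.
# The shape is invented for illustration only.
days = range(1, 181)
weights = [day for day in days]
total = sum(weights)

# Dividing each weight by the total turns the weights into probabilities.
failure_prob = {day: w / total for day, w in zip(days, weights)}

# The probabilities over all possible failure days add up to 1.
total_probability = sum(failure_prob.values())

# Probability that the component fails during the first 30 days.
p_first_month = sum(failure_prob[day] for day in range(1, 31))

print(f"total probability: {total_probability:.6f}")
print(f"P(failure in first 30 days): {p_first_month:.4f}")
```

Any question about a range of days (the first month, the last week) is answered the same way: add up the probabilities for the days in that range.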

In the real world, data is noisy and we don't expect real data to exactly follow theoretical distributions, but given enough data, the match should be close enough for us to reason about what's going on.

Uniform distribution - gambling and manufacturing

If the probability is the same for all input values, the distribution is uniform.

Let's imagine we're manufacturing candy, and we want to have equal numbers of red, blue, green, black, and white sweets in a packet. In theory, here's what we should observe.

But in reality, there's random noise so we might see something like this below. We can quantify the difference between the expected distribution and the actual distribution, which tells us something about the variability in the manufacturing process.
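One common way to quantify the gap between expected and observed counts is the chi-square statistic. The sketch below simulates a batch of sweets with equally likely colors and computes that statistic. The batch size of 1,000 and the function names are assumptions for illustration, and the chi-square statistic is one reasonable choice here rather than the only one.

```python
import random
from collections import Counter

COLORS = ["red", "blue", "green", "black", "white"]


def simulate_batch(n_sweets, rng):
    """Draw n_sweets colors, each color equally likely."""
    return Counter(rng.choice(COLORS) for _ in range(n_sweets))


def chi_square_statistic(observed, n_sweets):
    """Sum of (observed - expected)^2 / expected over all colors."""
    expected = n_sweets / len(COLORS)
    return sum((observed.get(c, 0) - expected) ** 2 / expected for c in COLORS)


rng = random.Random(42)  # fixed seed so the run is repeatable
counts = simulate_batch(1000, rng)
stat = chi_square_statistic(counts, 1000)

print(dict(counts))
print(f"chi-square statistic: {stat:.2f}")
```

With five colors there are 4 degrees of freedom, so a statistic above roughly 9.49 would be unusual (about 1 run in 20) if the process really were uniform.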

The uniform distribution also occurs in gambling, for example, lotteries or dice games.

Reading more

Uniform distribution description by NIST

Binomial distribution - pass/fail and conversion

Each customer who comes into a store or who visits a website will either buy or not buy, which we can turn into a conversion rate. We can model these kinds of pass/fail processes using the binomial distribution. Here's the probability distribution.

The binomial distribution shows us the probability of measuring different results given an underlying 'truth'. Let's imagine the 'true' conversion rate is 0.04. Because of sampling error, we might not measure exactly 0.04; instead, we might measure 0.045 or 0.055, depending on how many samples we take. It's important to understand what this means:

- There is uncertainty in our measurement.

- The smaller the sample, the bigger the uncertainty.

Although many technical people understand this, most non-technical people do not, which can lead to tension.
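To make the link between sample size and uncertainty concrete, here is a small sketch using the 0.04 conversion rate from above. It computes the binomial probability of each outcome and the standard error of the measured rate; the sample sizes are assumptions chosen for illustration.

```python
import math


def binomial_pmf(k, n, p):
    """Probability of exactly k conversions among n visitors."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def standard_error(p, n):
    """Standard deviation of the measured conversion rate for n visitors."""
    return math.sqrt(p * (1 - p) / n)


TRUE_RATE = 0.04

for n in (100, 1_000, 10_000):
    print(f"n={n:>6}  standard error={standard_error(TRUE_RATE, n):.4f}")

# Chance of measuring a rate of 0.06 or more from only 100 visitors.
p_six_or_more = sum(binomial_pmf(k, 100, TRUE_RATE) for k in range(6, 101))
print(f"P(measured rate >= 0.06 with n=100): {p_six_or_more:.3f}")
```

The standard error falls with the square root of the sample size, so measuring ten times more precisely takes a hundred times as many visitors.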

Reading more

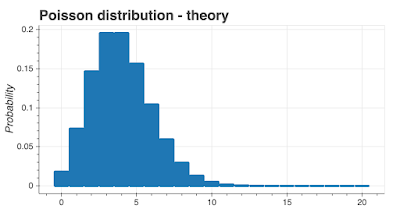

Poisson distribution - waiting in line

Imagine you're a bank serving customers with ATMs at a location. ATMs are expensive, but you don't want to keep people waiting in long lines to do their transactions; it's bad for business. So how do you balance the cost of an ATM against its use? By modeling how many people are using the ATM over a time period.

It turns out, the number of people who visit an ATM over a time period can be modeled using the Poisson distribution, which I've shown below. This gives us a way of assessing how much variation there might be in usage and therefore how many machines we might want to install.

The Poisson distribution is often used to model counting processes. It's very attractive because it has an unusual feature: the standard deviation of the distribution is \(\sqrt{\gamma}\), where \(\gamma\) is the mean, so a single parameter fixes both the center and the spread. Unfortunately, this property makes it a little too attractive; it's sometimes used when it shouldn't be, for example when the data varies more than the mean alone would predict.
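The sketch below checks the square-root property numerically and shows how the distribution could feed an ATM decision. The mean of 12 customers per hour and the threshold of 20 are hypothetical numbers, not figures from any real bank.

```python
import math


def poisson_pmf(k, mean):
    """Probability of exactly k arrivals when the average is `mean`."""
    return math.exp(-mean) * mean**k / math.factorial(k)


MEAN_CUSTOMERS = 12  # hypothetical average arrivals per hour

# Values of k far above the mean carry negligible probability,
# so truncating the sum at 80 is enough here.
probs = [poisson_pmf(k, MEAN_CUSTOMERS) for k in range(80)]

mean = sum(k * p for k, p in enumerate(probs))
variance = sum((k - mean) ** 2 * p for k, p in enumerate(probs))
std = math.sqrt(variance)

# Chance that more than 20 customers turn up in one hour.
p_busy = 1 - sum(probs[:21])

print(f"mean={mean:.3f}  std={std:.3f}  sqrt(mean)={math.sqrt(mean):.3f}")
print(f"P(more than 20 customers in an hour): {p_busy:.4f}")
```

A quick sanity check on real count data is to compare its variance with its mean; if the variance is much larger, a plain Poisson model is probably too optimistic about the spread.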

Reading more

The Poisson Distribution and Poisson Process Explained

Exponential distribution

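How long does a car battery last? How long do phone calls last? When will the next earthquake occur? Durations like these are often modeled with the exponential distribution, which is closely related to the Poisson distribution: if events occur at a constant average rate and the count per period is Poisson, the waiting time between events is exponential. I've shown this distribution below.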
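The link between the exponential and Poisson distributions can be seen in a short simulation. The sketch below generates exponential gaps between events and counts how many events land in each unit of time; the rate of 3 events per unit time is an arbitrary assumption.

```python
import random


def counts_per_interval(rate, n_intervals, rng):
    """Generate exponential gaps between events and count the events
    that fall in each unit-length interval."""
    counts = [0] * n_intervals
    t = rng.expovariate(rate)
    while t < n_intervals:
        counts[int(t)] += 1
        t += rng.expovariate(rate)
    return counts


RATE = 3.0  # hypothetical average number of events per unit time
rng = random.Random(0)  # fixed seed so the run is repeatable

counts = counts_per_interval(RATE, 10_000, rng)
mean_count = sum(counts) / len(counts)
var_count = sum((c - mean_count) ** 2 for c in counts) / len(counts)

# For Poisson counts, the mean and the variance should both be close to RATE.
print(f"mean count per interval: {mean_count:.3f}")
print(f"variance of counts:      {var_count:.3f}")
```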

Reading more

Power law distribution - finding fraud

How are incomes distributed in a population? How might you find fraud in the pattern of digits in expenses? It turns out that the first digits of invoice amounts are not evenly spread: low digits are much more common than high ones, a pattern known as Benford's law that is closely associated with power-law behavior. The chart below shows a generic power-law distribution - for fraud detection, it's 'flipped'.
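For the first-digit check, the expected frequencies usually come from Benford's law, where digit d appears with probability log10(1 + 1/d). The sketch below compares those expected frequencies with the first digits of a list of amounts. The powers of 2 are only stand-in data known to follow the pattern closely; real invoice amounts would take their place, and the function names are assumptions.

```python
import math
from collections import Counter


def benford_expected():
    """Expected frequency of each leading digit under Benford's law."""
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def first_digit(amount):
    """Leading non-zero digit of a non-zero amount."""
    # Scientific notation puts the leading digit first, e.g. '4.25e+02'.
    return int(f"{abs(amount):.15e}"[0])


def first_digit_frequencies(amounts):
    """Observed frequency of each leading digit, ignoring zero amounts."""
    counts = Counter(first_digit(a) for a in amounts if a != 0)
    total = sum(counts.values())
    return {d: counts.get(d, 0) / total for d in range(1, 10)}


# Stand-in data: powers of 2, not real invoices.
amounts = [2**n for n in range(1, 101)]

expected = benford_expected()
observed = first_digit_frequencies(amounts)

for d in range(1, 10):
    print(f"digit {d}: expected {expected[d]:.3f}  observed {observed[d]:.3f}")
```

A large gap between the observed and expected columns does not prove fraud, but it is a reasonable signal that a set of expenses deserves a closer look.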

Reading more

Normal distribution - almost everywhere, but not quite

What's the probability distribution for male soldiers' chest measurements? How are the results of A/B tests distributed? What about the distribution of measurement errors? All these, and many, many more follow the normal distribution, which is also called the Gaussian distribution or the bell curve. If you only learn one distribution, this is the one to learn.

The properties of this distribution are extremely well-known, and every student of statistics and probability theory will know them. It's ubiquitous because of something called the Central Limit Theorem, which, simplifying a great deal, says that the sum (or average) of a large number of independent samples from almost any distribution is approximately normally distributed.
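The Central Limit Theorem is easy to see in a simulation. The sketch below adds up 30 draws from a flat (uniform) distribution many times over and checks one signature of the normal distribution: about 68% of values fall within one standard deviation of the mean. The choice of 30 terms and 20,000 repetitions is arbitrary.

```python
import random
import statistics


def sample_sums(n_terms, n_sums, rng):
    """Return n_sums values, each the sum of n_terms uniform(0, 1) draws."""
    return [sum(rng.random() for _ in range(n_terms)) for _ in range(n_sums)]


rng = random.Random(1)  # fixed seed so the run is repeatable
sums = sample_sums(30, 20_000, rng)

mean = statistics.fmean(sums)
std = statistics.pstdev(sums)

# For a normal distribution, about 68.3% of values lie within one
# standard deviation of the mean.
within_one_sd = sum(abs(s - mean) <= std for s in sums) / len(sums)

print(f"mean={mean:.3f}  std={std:.3f}")
print(f"fraction within one standard deviation: {within_one_sd:.3f}")
```

The individual draws are nowhere near bell-shaped, yet their sums are, which is why the normal distribution turns up wherever many small independent effects are added together.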

Because it's everywhere, for some people, it's the only distribution they know. As the old saying goes, if you only have a hammer, every problem looks like a nail. This distribution can be over-used, with bad consequences.

Here's the distribution. It ought to look familiar.

Reading more

Lognormal distribution

How long do visitors spend on web pages? What about the distribution of internet traffic? Or the distribution of city sizes? These all follow a log-normal distribution that looks like the example below. The lognormal distribution is quite common in business.

Note the 'fat tail' or 'long tail' on the right-hand side. Many businesses have been caught out because they assumed sales or market risk followed a normal distribution when in fact they followed a lognormal distribution.

There's a variation of the Central Limit Theorem that yields log-normal distributions instead of normal distributions: it applies when many independent positive factors are multiplied together rather than added.
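A short simulation shows how multiplication produces the long right tail. The sketch below multiplies 50 random positive factors together many times over; the factor range of 0.8 to 1.25 and the sample counts are assumptions picked for illustration.

```python
import math
import random
import statistics


def random_products(n_factors, n_samples, rng):
    """Return n_samples values, each the product of n_factors random factors."""
    return [
        math.prod(rng.uniform(0.8, 1.25) for _ in range(n_factors))
        for _ in range(n_samples)
    ]


rng = random.Random(7)  # fixed seed so the run is repeatable
products = random_products(50, 10_000, rng)
logs = [math.log(p) for p in products]

mean, median = statistics.fmean(products), statistics.median(products)
log_mean, log_median = statistics.fmean(logs), statistics.median(logs)

# The long right tail pulls the mean of the raw values well above the median.
print(f"raw values: mean={mean:.2f}  median={median:.2f}")
# After taking logs the distribution is roughly symmetric, like a normal.
print(f"log values: mean={log_mean:.3f}  median={log_median:.3f}")
```

A mean that sits well above the median is a quick hint that data may be closer to lognormal than normal.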

Reading more

Other distributions

There are lots and lots of different distributions. I saw a list of 90 the other day. Almost all of them are esoteric and apply in a very limited set of cases. You don't have to know all of them but you should be aware that choosing the right distribution is important to make the correct estimates. The distributions I've listed in this blog post are probably the most important, and you should know them and their properties.

As you asked nicely, here is a list of some distributions.

Continuous or discrete - shaken or stirred?

Some quantities are discrete and some are continuous. A discrete quantity is something like a sales territory (e.g. Germany, Ireland, Spain) or customer count (you can't have 0.5 of a customer). A continuous quantity can take any value, for example, speed can be 45.2 kph, 120.01 kph, and so on. Some distributions apply to both continuous and discrete quantities, and some apply to only one or the other. To muddy the waters, sometimes continuous distributions are used to approximately model discrete quantities.

Business examples

Vehicles

Imagine you're running a delivery vehicle fleet. You need to keep your vehicles on the road, but you need to keep an eye on maintenance costs. You decide to use math to guide your decisions, so you work out the average lifetime for different components. You have two components A and B with the same lifetimes in miles. If either component fails, you have to tow the vehicle, which is very expensive.

- Component A. Average lifetime is 150,000 miles.

- Component B. Average lifetime is 150,000 miles.

A vehicle comes in for maintenance with 149,000 miles on the odometer. Should you replace components A and B?

As you might expect, there's a gotcha. Without knowing the probability distribution for failures, we can't make these decisions. For example, a windshield might have a uniform failure rate distribution, with the probability of failure for miles 0-100 the same as the probability of failure for miles 100,000-100,100. A clutch may have a failure rate that increases with mileage, the probability of failure at miles 100,000-100,100 being much higher than the probability of failure at miles 0-100. Because we know what a clutch and a windshield are, we might decide to replace the clutch and leave the windshield. But what if A and B were a serpentine belt and a heat shield?

The only way to make rational decisions is to understand what distribution the probability of failure follows, which may well be very different for different components (e.g. car seats vs. tires).
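Here is a rough sketch of how two components with the same 150,000-mile average lifetime can call for different decisions. It uses the Weibull distribution, which is my choice for illustration rather than anything measured: a shape of 1 gives a constant failure rate (the exponential case), while a shape above 1 gives a failure rate that rises with mileage. The shape values and the 10,000-mile service interval are hypothetical.

```python
import math


def weibull_scale_for_mean(mean_life, shape):
    """Scale parameter that gives a Weibull distribution the requested mean."""
    return mean_life / math.gamma(1 + 1 / shape)


def conditional_failure_prob(miles_so_far, extra_miles, mean_life, shape):
    """Probability of failing within the next extra_miles, given the
    component has already survived miles_so_far."""
    scale = weibull_scale_for_mean(mean_life, shape)

    def survival(miles):
        return math.exp(-((miles / scale) ** shape))

    return 1 - survival(miles_so_far + extra_miles) / survival(miles_so_far)


MEAN_LIFE = 150_000  # average lifetime in miles, same for both components
ODOMETER = 149_000
NEXT_SERVICE = 10_000  # hypothetical miles until the next maintenance visit

# Constant failure rate: age does not matter.
p_constant = conditional_failure_prob(ODOMETER, NEXT_SERVICE, MEAN_LIFE, shape=1.0)
p_constant_new = conditional_failure_prob(0, NEXT_SERVICE, MEAN_LIFE, shape=1.0)

# Wear-out behavior: the failure rate rises with mileage.
p_wearout = conditional_failure_prob(ODOMETER, NEXT_SERVICE, MEAN_LIFE, shape=4.0)

print(f"constant rate, at 149,000 miles: {p_constant:.3f}")
print(f"constant rate, brand new:        {p_constant_new:.3f}")
print(f"wear-out, at 149,000 miles:      {p_wearout:.3f}")
```

Under these assumptions, replacing the constant-rate component buys nothing, because a new part is just as likely to fail before the next service, while the wear-out component is a much stronger candidate for replacement.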

Marketing

A new analyst is studying the market for luxury goods in Germany. They have partial data for the fraction of the population that have a certain income. Using what they have, they assume their data is normally distributed and they make a forecast for the fraction of the population that will have an income high enough to afford luxury items. Do you think their forecast will be too low, just right, or too high?

Incomes are usually log-normally distributed, so the analyst, in this case, has chosen the wrong distribution. Because the lognormal has a very long right tail, the analyst's estimate is likely to be an underestimate and may be substantially out. A competitor might not make the same mistake.
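The size of the error can be sketched with some made-up numbers. Assume incomes are lognormal with a median of 40,000 and a log-scale spread of 0.7, and that luxury goods need an income of 150,000; all three figures are hypothetical. The analyst's normal model below is given the same mean and standard deviation as the lognormal, which is one plausible way the mistake could play out.

```python
import math
from statistics import NormalDist

# Hypothetical figures, for illustration only.
MEDIAN_INCOME = 40_000
LOG_SIGMA = 0.7
LUXURY_THRESHOLD = 150_000

# Lognormal model: the log of income is normally distributed.
mu = math.log(MEDIAN_INCOME)
log_income = NormalDist(mu, LOG_SIGMA)
lognormal_tail = 1 - log_income.cdf(math.log(LUXURY_THRESHOLD))

# Normal model with the same mean and standard deviation as the lognormal.
mean = math.exp(mu + LOG_SIGMA**2 / 2)
std = mean * math.sqrt(math.exp(LOG_SIGMA**2) - 1)
normal_tail = 1 - NormalDist(mean, std).cdf(LUXURY_THRESHOLD)

print(f"lognormal model: {lognormal_tail:.2%} of people above the threshold")
print(f"normal model:    {normal_tail:.2%} of people above the threshold")
```

With these numbers the normal model puts well under half as many people above the threshold as the lognormal model does, which is the kind of underestimate described above.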

Takeaways

I've interviewed people who claim data science on their resumes, but only know the normal distribution. If you assume your data is normal, when in reality it's log-normal or Poisson, things are going to go badly wrong for you. Any analyst in business needs to be very comfortable with different distributions and needs to know which may be applicable and when.