I can't talk to you all

Every week, I get several contacts on LinkedIn from students looking for their first job in Data Science. I'm very sympathetic, but I just don't have the time to speak to people individually. I do want to help though, which is why I put together this blog post. I'm going to tell you what hiring managers are looking for, the why behind the questions you might be asked, and what you can do to stand out as a candidate.

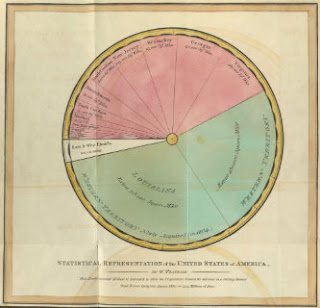

(Chiplanay, CC BY-SA 4.0, via Wikimedia Commons)

Baseline expectations

I don't expect new graduates to have industry knowledge and context, but I do expect them to have a basic toolkit of methods and to know when to apply them and when not to. In my view, the purpose of completing a postgraduate degree is to give someone that toolkit; I'm not going to hire a candidate who needs basic training when they've just completed a couple of years of study in the field.

Having said that, I don't expect perfect answers, I've had people who've forgotten the word 'median' but could tell me what they mean and that's OK, everyone forgets things under pressure. I'm happy if people can tell me the principles even if they've forgotten the name of the method.

The goal of my interview process is to find candidates who'll be productive quickly. Your goal as a candidate is to convince me that you can get things done quickly, right, and well.

Classroom vs. the real world

In business, our problems are often poorly framed and ambiguous, it's very rare that problems look like classroom problems. I ask questions that are typical of the problems I see in the real world.

I usually start with simple questions about how you analyze a small data set with lots of outliers. It's a gift of a question; anyone who's done analysis themselves will have come across the problem and will know the answer, it's easy. Many candidates give the wrong answers, with some giving crazily complex answers. Of course, sales and other business data often have small sample sizes and large outliers.

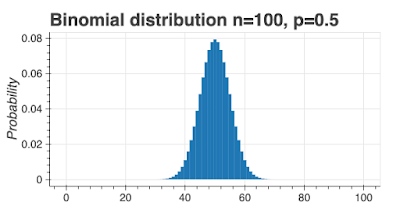

My follow-up is usually something more complex. I sometimes ask about detecting bias in a data set. This requires some knowledge of probability distributions, confidence intervals, and statistical testing. Bear in mind, I'm not asking people to do calculations, I'm asking them how they would approach the problem and the methods they would use (again, it's OK if they forget the names of the methods). This problem is relevant because we sometimes need to detect whether there's an effect or not, for example, can we really increase sales through sales training? Once again, some candidates flounder and have no idea how to approach the problem.

Testing knowledge and insight

I sometimes ask how you would tell if two large, normally distributed data sets are different. This could be an internet A/B test or something similar. Some candidates ask how large the data set is, which is a good question; the really good candidates tell me why their question is important and the basis of it. Most candidates tell me very confidently that they would use the Student-t test, which isn't right but is a sort-of OK answer. I've happily accepted an answer along the lines of "I can't remember the exact name of the test, but it's not the Student-t. The test is for larger data sets and involves the calculation of confidence intervals". I don't expect candidates to have word-perfect answers, but I do expect them to tell me something about the methods they would use.

Often in business, we have problems where the data isn't normally distributed so I expect candidates to have some knowledge of non-normal distributions. I've asked candidates about non-normal distributions and what kind of processes give them. Sadly, a number of candidates just look blank. I've even had candidates who've just finished Master's degrees in statistics tell me they've only ever studied the normal distribution.

I'm looking for people who have a method toolkit but also have an understanding of the why underneath, for example, knowing why the Student-t test isn't a great answer for a large data set.

Data science != machine learning

I've found most candidates are happy to talk about the machine learning methods they've used, but when I've asked candidates why they used the methods they did, there's often no good answer. XGBoost and random forest are quite different and a candidate really should have some understanding of when and why one method might be better than another.

I like to ask candidates under what circumstances machine learning is the wrong solution and there are lots of good answers to this question. Sadly, many candidates can't give any answer, and some candidates can't even tell me when machine learning is a good approach.

Machine learning solves real-world problems but has real-world issues too. I expect candidates with postgraduate degrees, especially those with PhDs, to know something about real-world social issues. There have been terrible cases where machine learning-based systems have given racist results. An OK candidate should know that things like this have happened, a good candidate should know why, and a great candidate should know what that implies for business.

Of course, not every data science problem is a machine learning problem and I expect all candidates at all levels to appreciate that and to know what that means.

Do you really have the skills you claim?

This is an issue for candidates with several years of business experience, but recent graduates have to watch out for it too. I've found that many people really don't have the skills they claim to have or have wildly overstated their skill level. I've had candidates claim extensive experience in running and analyzing A/B tests but have no idea about statistical power and very limited idea of statistical testing. I've also had candidates massively overstate their Python and SQL skills. Don't apply for technical positions claiming technical skills you really don't have; you should have a realistic assessment of your skill level.

Confidence != ability

This is more of a problem with experienced candidates but I've seen it with soon-to-be graduates too. I've interviewed people who come across as very slick and very confident but who've given me wildly wrong answers with total confidence. This is a dangerous combination for the workplace. It's one thing to be wrong, but it's far far worse to be wrong and be convincing.

To be fair, sometimes overconfidence comes from a lack of experience and a lack of exposure to real business problems. I've spoken to some recent (first-degree) graduates who seemed to believe they had all the skills needed to work on advanced machine learning problems, but they had woefully inadequate analysis skills and a total lack of experience. It's a forgivable reason for over-confidence, but I don't want to be the person paying for their naïveté.

Don't Google the answer

Don't tell me you would Google how to solve the problem. That approach won't work. A Google search won't tell you that not all count data is Poisson distributed or why variable importance is a useful concept. Even worse, don't try and Google the answer during the interview.

Don't be rude, naive, or stupid

A receptionist once told me that an interview candidate had been rude to her. It was an instant no for that candidate at that point. If you think other people in the workplace are beneath you, I don't want you on my team.

I remember sitting in lecture theaters at the start of the semester where students would ask question after question about the exams and what would be in them. I've seen that same behavior at job interviews where some candidates have asked an endless stream of questions about the process and what they'll be asked at what stage. They haven't realized that the world of work is not the world of education. None of the people who asked questions like this made it passed the first round or two, they just weren't ready for the work place. It's fine to ask one or two questions about the process, but any more than that shows naivete.

I was recruiting data scientists fresh out of Ph.D. programs and one candidate, who had zero industrial experience, kept asking how quickly they could be promoted to a management position. They didn't ask what we were looking for in a manager, or the size of the team, or who was on it, but they were very keen on telling me they expected to be managing people within a few months. Guess who we didn't hire?

Be prepared

Be prepared for technical questions at any stage of the interview process. You might even get technical questions during HR screening. If someone non-technical asks you a technical question, be careful to answer in a way they can understand.

Don't be surprised if questions are ambiguously worded. Most business problems are unclear and you'll be expected to deal with poorly defined problems. It's fine to ask for more details or for clarification.

If you forget the name of a method, don't worry about it. Talk through the principle and show you understand the underlying ideas. It's important to show you understand what needs to be done.

If you can, talk about what you've done and why you did it. For example, if you've done a machine learning project, use that as an example, making sure you explain why you made the project choices you did. Work your experience into your answers and give examples as much as you can.

Be prepared to talk about any technical skills you claim or any area of expertise. Almost all the people interviewing you will be more senior than you and will have done some of the same things you have. They may well be experts.

If you haven't interviewed in many places, or if you're out of practice, role-playing interviews should help. Don't choose people who'll go easy on you, instead chose people who'll give you a tough interview, ideally tougher than real-world interviews.

Most interviews are now done over Zoom. Make sure your camera is on and you're in a good place to do the interview.

Stand out in a good way

When you answer technical questions, be sure to explain why. If you can't answer the question, tell me what you do know. Don't just tell me you would use the Wilson score interval, tell me why you would use it. Don't just tell me you don't know how to calculate sample sizes, tell me how you would go about finding out and what you know about the process.

I love it when a candidate has a GitHub page showing their work. It's fine if it's mostly class projects, in fact, that's what I would expect. But if you do have a GitHub page, make sure there's something substantive there and that your work is high quality. I always check GitHub pages and I've turned down candidates if what I found was poor.

Very, very few candidates have done this, but if you have a writing sample to show, that would be great. A course essay would do, but obviously a great one. You could have your sample on your GitHub page. A writing sample shows you can communicate clearly in written English, which is an essential skill.

If you're a good speaker, try and get a video of you speaking up on YouTube and link it from your GitHub page (don't do this if you're bad or just average). Of course, this shows your ability to speak well.

For heaven's sake, ask good questions. Every interviewer should leave you time to ask questions and you should have great questions to ask. Remember, asking good questions is expected.

What hiring managers want

We need people who:

- have the necessary skills

- will be productive quickly

- need a minimal amount of hand-holding

- know the limitations of their knowledge and who we can trust not to make stupid mistakes

- can thrive in an environment where problems are poorly defined

- can work with the rest of the team.

Good luck.

.jpg)

.jpg)

.jpg)

.jpg)