Tossing and turning

A few months ago, someone commented on one of my blog posts and asked how you work out if a coin is biased or not. I've been thinking about the problem since then. It's not a difficult one, but it does bring up some core notions in probability theory and statistics which are very relevant to understanding how A/B testing works, or indeed any kind of statistical test. I'm going to talk you through how you figure out if a coin is biased, including an explanation of some of the basic ideas of statistical tests.

The trial

A single coin toss is an example of something called a Bernoulli trial, which is any kind of binary decision you can express as a success or failure (e.g. heads or tails). For some reason, most probability texts refer to heads as a success.

We can work out the probability of getting different numbers of heads from a number of tosses, or more formally, what's the probability \(P(k)\) of getting \(k\) heads from \(n\) tosses, where \(0 \le k \le n\)? By hand, we can do it for three tosses (\(n=3\)):

| Number of heads (k) | Combinations (n = 3) | Count | Probability |
|---|---|---|---|
| 0 | TTT | 1 | 1/8 |
| 1 | HTT THT TTH | 3 | 3/8 |
| 2 | THH HTH HHT | 3 | 3/8 |
| 3 | HHH | 1 | 1/8 |
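The table generalizes: there are \(\binom{n}{k}\) ways to arrange \(k\) heads among \(n\) tosses, so for a coin whose probability of heads is \(p\), the standard binomial formula is \(P(k) = \binom{n}{k} p^k (1-p)^{n-k}\). As a sanity check, here's a minimal Python sketch that enumerates every sequence of three tosses and compares the brute-force counts with the formula. The function names are mine, purely for illustration.

```python
from itertools import product
from math import comb


def count_by_heads(n):
    """Enumerate all 2**n toss sequences and count them by number of heads."""
    counts = [0] * (n + 1)
    for seq in product("HT", repeat=n):
        counts[seq.count("H")] += 1
    return counts


def binomial_pmf(k, n, p=0.5):
    """Probability of exactly k heads in n tosses when P(heads) = p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n = 3
counts = count_by_heads(n)
for k, count in enumerate(counts):
    # Columns: heads, number of sequences, brute-force probability, formula
    print(k, count, count / 2**n, binomial_pmf(k, n))
```

Brute-force enumeration is only practical for small \(n\) (the number of sequences doubles with every toss), which is why the formula matters.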

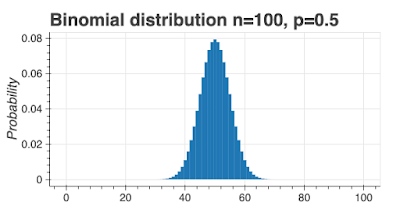

If we plot \(P(k)\) for an unbiased coin (\(p=0.5\)), where \(n=100\) and \(0 \le k \le n\), we see this probability distribution:

This is called a binomial distribution and it looks a lot like the normal distribution for large (\(> 30\)) values of \(n\).

I've created two charts below for an unbiased coin (\(p=0.5\)), one with \(n=20\) and one with \(n=40\). The \(n=40\) chart is narrower, which is easier to see using the score (the fraction of tosses that come up heads, \(k/n\)) as the x-axis.

As an illustration of what these charts mean, I've colored all scores 0.7 and higher red. You can see the red area is bigger for \(n=20\) than for \(n=40\). Bear in mind, the red area represents the probability of a score of 0.7 or higher. In other words, if you toss a fair coin 20 times, you have a 0.058 chance of seeing a score of 0.7 or more, but if you toss a fair coin 40 times, the probability of seeing a score of 0.7 or more drops to 0.008.

These charts tell us something useful: as we increase the number of tosses, the curve gets narrower, meaning the probability of getting results further away from \(0.5\) gets smaller. If we saw a score of 0.7 for 20 tosses, we might not be able to say the coin was biased, but if we got a score of 0.7 after 40 tosses, we know this score is very unlikely for a fair coin, so the coin is more likely to be biased.
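The two red-area probabilities can be checked exactly by counting sequences. This is a small sketch for a fair coin only; `prob_at_least` is a name I've made up for the example.

```python
from math import comb


def prob_at_least(k_min, n):
    """P(at least k_min heads in n tosses) for a fair coin, computed exactly."""
    favourable = sum(comb(n, k) for k in range(k_min, n + 1))
    return favourable / 2**n


# A score of 0.7 means 14 heads out of 20, or 28 heads out of 40.
p20 = prob_at_least(14, 20)
p40 = prob_at_least(28, 40)
print(f"n=20: {p20:.3f}")  # 0.058
print(f"n=40: {p40:.3f}")  # 0.008
```

I pass the head counts as integers rather than computing `0.7 * n`, because floating-point rounding can land a hair on either side of the whole number.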

Thresholds

Let me re-state some facts:

- For any coin (biased or unbiased) any score from 0 to 1 is possible for any number of tosses.

- Some results are less likely than others; e.g. for an unbiased coin and 40 tosses, there's only a 0.008 chance of seeing a score of 0.7 or higher.

We can use probability thresholds to decide between biased and non-biased coins. We're going to use a threshold (mostly called confidence) of 95% to decide if the coin is biased or not, which is the same as a significance level of 5%. In the chart below, the red areas represent 5% probability, and the blue areas 95% probability.

Here's the idea to work out if the coin is biased. Set a significance level (one minus the confidence), usually 0.05. Throw the coin \(n\) times, record the number of heads, and work out a score. Draw the theoretical probability chart for the number of throws (like the one I've drawn above) and color in 95% of the probabilities blue and 5% red. If the experimental score lands in the red zones, we'll consider the coin to be biased; if it lands in the blue zone, we'll consider it unbiased.
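To make the red and blue zones concrete, here's a rough sketch that finds where the red zones start for a fair coin. It assumes a symmetric, two-tailed red zone, and `red_zone` is a hypothetical helper of mine, not a library function. It's only meant for modest \(n\).

```python
from math import comb


def red_zone(n, alpha=0.05):
    """For a fair coin, return (lo, hi): the red zone is heads <= lo or heads >= hi.

    The two tails are grown symmetrically for as long as their combined
    probability stays at or below alpha.
    """
    total = 2**n
    tail = 0  # number of sequences in the lower tail so far
    lo = -1   # -1 means the red zone is empty
    for k in range(n // 2 + 1):
        grown = tail + comb(n, k)
        if 2 * grown > alpha * total:
            break
        tail, lo = grown, k
    return lo, n - lo


for n in (20, 40):
    lo, hi = red_zone(n)
    print(f"n={n}: call the coin biased if heads <= {lo} or heads >= {hi}")
```

Because head counts are whole numbers, the red zone usually holds a little less than 5% of the probability rather than exactly 5%.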

This is probabilistic decision-making. Using a significance level of 0.05 means we'll wrongly say a fair coin is biased 5% of the time. Can we make the threshold stricter, could we use 0.01 for instance? Yes, we could, but the cost is increasing the number of trials.

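In practice, we don't need to draw charts; a binomial test function does the calculation for us. Here I'm using binom_test (presumably SciPy's `scipy.stats.binom_test`, which newer SciPy releases replace with `binomtest`). To simplify, binom_test has three arguments: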

- x - the number of successes

- n - the number of samples

- p - the hypothesized probability of success

Let's see how this works with a significance level of 0.05. Let's take the case where we have 200 coin tosses and 140 (70%) of them come up heads. We're hypothesizing that the coin is fair, so \(p=0.5\).
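If you want to see what a test like this does under the hood, here's a dependency-free sketch of an exact two-sided binomial test. It's my own illustration, not SciPy's source: it uses one common convention, adding up the probability of every outcome that is no more likely than the one observed. With SciPy installed, `scipy.stats.binomtest(140, 200, p=0.5).pvalue` should give essentially the same number.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n trials when P(success) = p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def two_sided_binomial_p_value(x, n, p=0.5):
    """Exact two-sided p-value: total probability of every outcome that is
    no more likely than the observed one under the hypothesized p."""
    observed = binomial_pmf(x, n, p)
    # Small relative tolerance so ties are not lost to rounding error.
    cutoff = observed * (1 + 1e-7)
    return sum(
        prob
        for prob in (binomial_pmf(k, n, p) for k in range(n + 1))
        if prob <= cutoff
    )


p_value = two_sided_binomial_p_value(x=140, n=200, p=0.5)
print(p_value)         # roughly 1.5e-08
print(p_value < 0.05)  # True
```

This version is fine for a few hundred tosses; for very large \(n\) the binomial coefficients get too big for floating point, and a library routine is the better choice.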

The p-value we get is 1.5070615573524992e-08, which is way less than our significance threshold of 0.05 (we're in the red area of the chart above). We would then reject the idea the coin is fair.

This time, the p-value is 0.10363903843786755, which is greater than our significance threshold of 0.05 (we're in the blue area of the chart), so the result is consistent with a fair coin (we fail to reject the null).

What if my results are not significant? How many tosses?

Let's imagine you have reason to believe the coin is biased. You throw it 200 times and you see 112 heads. binom_test gives a p-value of about 0.10, which is above 0.05, so you can't conclude the coin is biased. So what do you do next?

The answer is simple: toss the coin more times.

The formula for the sample size, \(n\), is:

\[ n = \frac{p(1-p)}{\sigma^2} \]

where \(p\) is the probability of heads we suspect and \(\sigma\) is the standard error.

Here's how this works in practice. Let's assume we think our coin is just a little biased, to 0.55, and we want the standard error to be \(\pm 0.04\). Here's how many tosses we would need: about 155 (the formula gives 154.7). What if we want more certainty, say \(\pm 0.005\)? Then the number of tosses goes up to 9,900. In general, the bigger the bias, the fewer tosses we need, and the more certainty we want, the more tosses we need.
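Here's a short sketch of the sample-size arithmetic. It assumes the usual standard error of a proportion, \(\sigma = \sqrt{p(1-p)/n}\), rearranged to \(n = p(1-p)/\sigma^2\), which reproduces the 9,900 figure above. `tosses_needed` is a name I've invented for the example.

```python
from fractions import Fraction
from math import ceil


def tosses_needed(p, standard_error):
    """Tosses needed so that sqrt(p * (1 - p) / n) is at most the standard error.

    Fractions keep the arithmetic exact, so a result such as 9900 is not
    nudged to 9901 by floating-point rounding before it is rounded up.
    """
    p = Fraction(str(p))
    se = Fraction(str(standard_error))
    return ceil(p * (1 - p) / se**2)


print(tosses_needed(0.55, 0.04))   # 155 (the formula gives 154.6875)
print(tosses_needed(0.55, 0.005))  # 9900
```

I round up because you can't toss a coin a fractional number of times, and rounding down would leave the standard error slightly larger than the target.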

If I think my coin is biased, what's my best estimate of the bias?

Let's imagine I toss the coin 1,000 times and see 550 heads. binom_test tells me the result is significant and it's likely my coin is biased, but what's my estimate of the bias? This is simple: it's just the observed proportion of heads (the sample mean), so 0.55. Using the statistics of proportions, I can put a 95% confidence interval around my estimate of the bias of the coin. Through math I won't show here, using the data we have, I can estimate the coin is biased 0.55 ± 0.03.
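For the curious, the ± 0.03 is consistent with the standard normal-approximation (Wald) interval for a proportion, \(\hat{p} \pm z\sqrt{\hat{p}(1-\hat{p})/n}\) with \(z \approx 1.96\) for 95%. I'm assuming that's the calculation intended here. A minimal sketch, with a function name of my own choosing:

```python
from math import sqrt
from statistics import NormalDist


def proportion_confidence_interval(heads, tosses, confidence=0.95):
    """Normal-approximation (Wald) interval for the probability of heads."""
    estimate = heads / tosses
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    margin = z * sqrt(estimate * (1 - estimate) / tosses)
    return estimate, margin


estimate, margin = proportion_confidence_interval(550, 1000)
print(f"{estimate:.2f} ± {margin:.2f}")  # 0.55 ± 0.03
```

The approximation works well here because \(n\) is large and the estimate is nowhere near 0 or 1; for small samples or extreme proportions, other intervals behave better.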

Is my coin biased?

This is a nice theoretical discussion, but how might you go about deciding if a coin is biased? Here's a step-by-step process.

- Decide on the level of certainty you want in your results. 95% is a good measure.

- Decide the minimum level of bias you want to detect. If the coin should return heads 50% of the time, what level of bias can you live with? If it's biased to 60%, is this OK? What about biased to 55% or 50.5%?

- Calculate the number of tosses you need.

- Toss your coin.

- Use binom_test to figure out if the coin deviates significantly from 0.5.
